Scoring & Rubric Evaluation: Turning Subjective Output Review into Measurable Quality

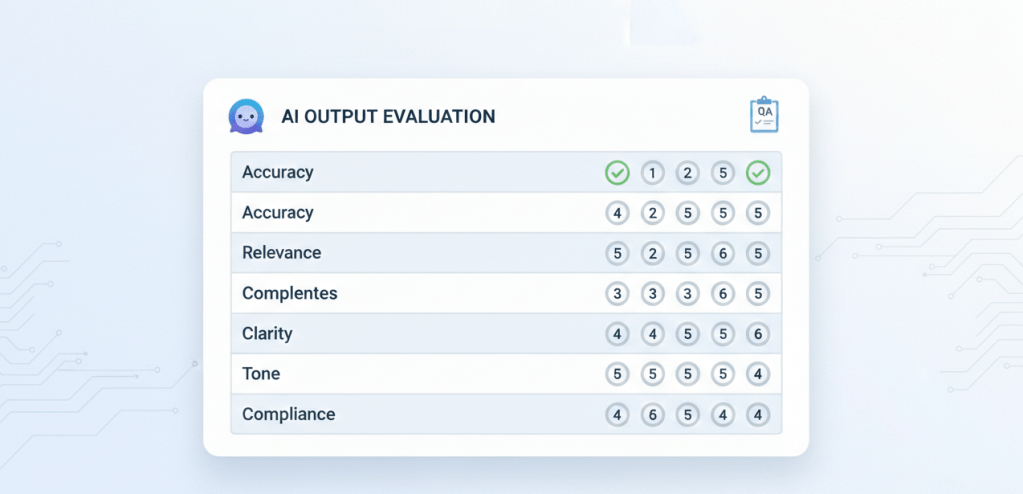

Human evaluation is unavoidable in generative AI—but human evaluation without structure becomes inconsistent, slow, and argumentative. A well-designed rubric transforms output review into a repeatable measurement system. It enables QA teams to quantify quality dimensions such as accuracy, relevance, clarity, tone, and compliance, then track improvement or regression across releases.

Objectives

- Design a rubric suited to LLM chatbot outputs, including those in regulated domains.

- Calibrate reviewers to reduce drift and improve inter-rater reliability.

- Operationalize scoring into dashboards and release gates.

- Apply rubric scoring to age eligibility responses in insurance.

1) A rubric is a measurement instrument—treat it like one

A rubric should not be a list of “nice-to-have” qualities. It must define:

- Criteria: what is being measured (e.g., Accuracy, Compliance)

- Anchors: what “1 vs 3 vs 5” looks like in concrete terms

- Examples: known-good and known-bad outputs for calibration

In regulated settings, the rubric should weight accuracy and compliance more heavily than style criteria. A chatbot that is polite but wrong has still failed.
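A rubric can also be written down as data, so its anchors and weights are versioned alongside the test suite. The sketch below is a minimal illustration that assumes a 1-5 scale. The `Criterion` class, the anchor wording, and the 0.4/0.4/0.2 weights are hypothetical choices, not a prescribed scheme.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Criterion:
    """One rubric criterion: what is measured, how much it counts, and its anchors."""
    name: str
    weight: float
    # Concrete descriptions for selected points on the 1-5 scale.
    anchors: dict[int, str] = field(default_factory=dict)


# Hypothetical rubric for a regulated-domain chatbot; anchors and weights are illustrative.
RUBRIC = [
    Criterion("Accuracy", 0.4, {1: "Contradicts policy", 3: "Correct but uncited", 5: "Correct and cited"}),
    Criterion("Compliance", 0.4, {1: "Critical violation", 3: "Missing required disclaimer", 5: "Fully compliant"}),
    Criterion("Clarity", 0.2, {1: "Hard to follow", 3: "Readable", 5: "Readable with clear next steps"}),
]


def weighted_score(rubric: list[Criterion], scores: dict[str, int]) -> float:
    """Combine per-criterion 1-5 scores into a weighted mean on the same 1-5 scale."""
    total_weight = sum(c.weight for c in rubric)
    total = 0.0
    for c in rubric:
        score = scores[c.name]  # raises KeyError if a criterion was not scored
        if not 1 <= score <= 5:
            raise ValueError(f"{c.name} score {score} is outside the 1-5 scale")
        total += c.weight * score
    return total / total_weight
```

With these illustrative weights, a fluent but inaccurate answer (Accuracy 1, Compliance 5, Clarity 5) scores 3.4, compared with about 3.67 for an unweighted mean.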

Once the rubric exists, calibration becomes the difference between consistent measurement and noisy opinion.

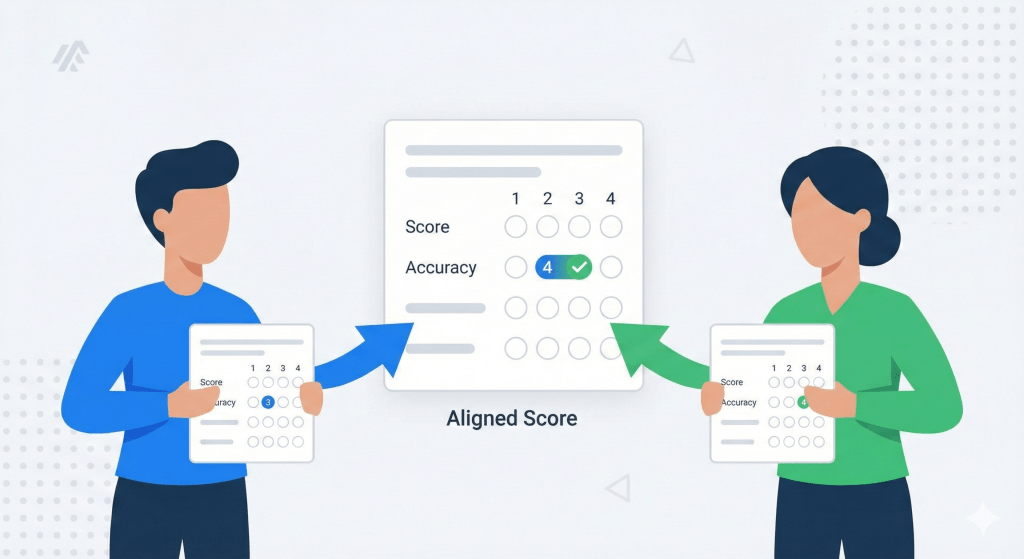

2) Calibration: achieving inter-rater reliability

Rubric scoring fails when two reviewers interpret “acceptable” differently. Calibration is the process of aligning judgments through shared examples and anchor refinement.
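Agreement between two reviewers can be quantified rather than judged by eye. One standard statistic is Cohen's kappa, which compares the observed agreement p_o with the agreement p_e expected by chance given each reviewer's own score distribution: κ = (p_o − p_e) / (1 − p_e). The function below is a minimal sketch for two reviewers scoring the same outputs on one criterion. The function name is hypothetical. Plain kappa treats a 4-vs-5 split the same as a 1-vs-5 split, so for ordinal 1-5 scales a weighted kappa (for example scikit-learn's `cohen_kappa_score` with `weights="quadratic"`) is often the better fit.

```python
from collections import Counter


def cohens_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two reviewers scoring the same outputs."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of outputs")
    n = len(rater_a)
    # Observed agreement: share of outputs where both reviewers gave the same score.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: probability both pick the same score if each scored
    # independently according to their own score distribution.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_expected == 1.0:
        # Both reviewers used one identical score for every output.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)
```

Values near 1 indicate strong agreement; values near 0 mean the reviewers agree no more often than chance would predict.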

Practical calibration steps:

- Score a shared set of outputs (a small golden calibration pack).

- Review disagreements and refine anchors until differences converge.

- Track variance per criterion (Accuracy variance matters more than Tone variance).

- Recalibrate after major changes: model upgrades, system prompt updates, policy updates.
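The variance-tracking step can be automated over the calibration pack. This sketch assumes scores are stored as criterion → output id → list of reviewer scores (a hypothetical layout). It reports the mean per-output variance for each criterion and flags outputs whose scores span more than one point as candidates for anchor discussion; the one-point tolerance is an illustrative default.

```python
from statistics import fmean, pvariance

# Hypothetical layout: criterion -> output id -> scores from each reviewer.
CalibrationScores = dict[str, dict[str, list[int]]]


def calibration_report(
    scores: CalibrationScores, max_spread: int = 1
) -> tuple[dict[str, float], list[tuple[str, str]]]:
    """Summarise reviewer disagreement per criterion on a shared calibration pack.

    Returns the mean (across outputs) of the per-output score variance for each
    criterion, plus the (criterion, output id) pairs whose max-min spread exceeds
    `max_spread`, i.e. the items worth reviewing when refining anchors.
    """
    mean_variance: dict[str, float] = {}
    to_discuss: list[tuple[str, str]] = []
    for criterion, per_output in scores.items():
        variances = []
        for output_id, reviewer_scores in per_output.items():
            variances.append(pvariance(reviewer_scores))
            if max(reviewer_scores) - min(reviewer_scores) > max_spread:
                to_discuss.append((criterion, output_id))
        mean_variance[criterion] = fmean(variances)
    return mean_variance, to_discuss
```

Running it before and after an anchor revision shows, criterion by criterion, whether the refinement actually narrowed disagreement.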

With calibration in place, rubric scoring becomes not only consistent but operational—supporting dashboards and release governance.

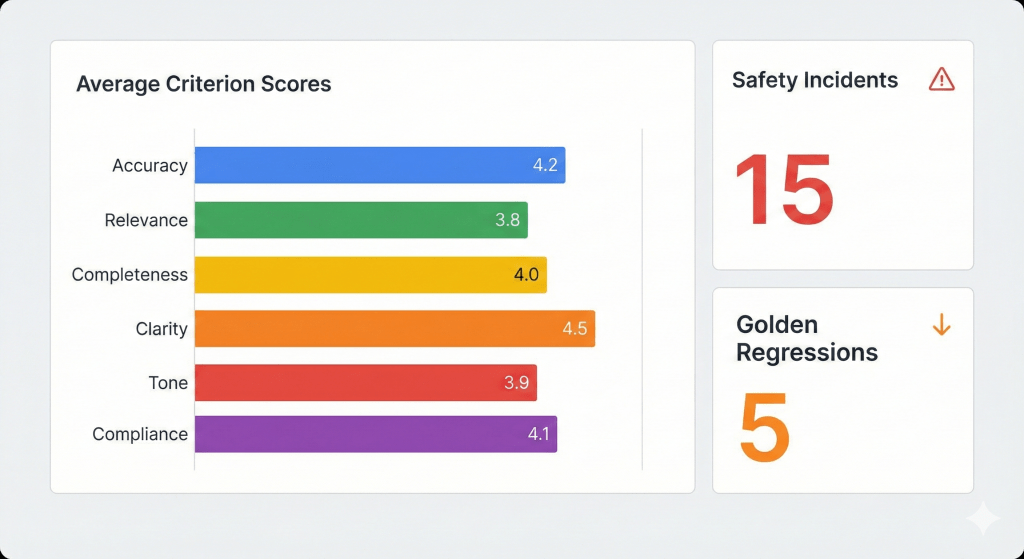

3) From scores to governance: dashboards and release gates

Rubric scores become strategically valuable when aggregated and trended. A simple dashboard can show:

- Average scores by criterion (Accuracy, Compliance, Clarity, etc.)

- Trendlines across releases (is Accuracy improving or drifting?)

- Golden set regressions (critical prompts that must not degrade)

- Safety/compliance incident counts (must be near-zero in production systems)
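The first two dashboard views reduce to a group-by over individual scores. A minimal sketch, assuming each reviewed output is logged as a hypothetical `(release, criterion, score)` row; both function names are illustrative.

```python
from collections import defaultdict
from statistics import fmean


def average_by_release(records: list[tuple[str, str, int]]) -> dict[tuple[str, str], float]:
    """Mean score per (criterion, release) from (release, criterion, score) rows."""
    buckets: dict[tuple[str, str], list[int]] = defaultdict(list)
    for release, criterion, score in records:
        buckets[(criterion, release)].append(score)
    return {key: fmean(scores) for key, scores in buckets.items()}


def release_deltas(
    averages: dict[tuple[str, str], float], criterion: str, release_order: list[str]
) -> list[float]:
    """Change in one criterion's mean between consecutive releases (positive = improving).

    Releases with no scores for the criterion are skipped, so a delta may span a gap.
    """
    series = [averages[(criterion, r)] for r in release_order if (criterion, r) in averages]
    return [later - earlier for earlier, later in zip(series, series[1:])]
```

A positive delta means the criterion's average improved from one release to the next; a sustained run of negative deltas is the drift the trendline is meant to surface.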

Example release gates:

- Accuracy: average ≥ 4.0 on core scenarios

- Compliance: 0 critical violations on compliance suite

- Golden regressions: 0 regressions on high-risk prompts
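The example gates can be encoded as an automated go/no-go check. The sketch below assumes a hypothetical `ReleaseCandidate` record, treats the Accuracy gate as a mean over core-scenario scores, and counts a golden prompt as regressed if it scores below its last approved baseline or is missing from the current run. Those definitions are assumptions to adapt, not fixed rules.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass
class ReleaseCandidate:
    """Evaluation results for one release; field names are hypothetical."""
    core_accuracy_scores: list[float]    # Accuracy scores on core scenarios
    critical_compliance_violations: int  # critical findings from the compliance suite
    golden_baseline: dict[str, float]    # high-risk prompt id -> score in last approved release
    golden_current: dict[str, float]     # high-risk prompt id -> score in this candidate


def check_release_gates(rc: ReleaseCandidate, min_accuracy: float = 4.0) -> list[str]:
    """Return the failed gates; an empty list means go."""
    failures = []
    accuracy = fmean(rc.core_accuracy_scores)
    if accuracy < min_accuracy:
        failures.append(f"Accuracy {accuracy:.2f} < {min_accuracy}")
    if rc.critical_compliance_violations > 0:
        failures.append(f"{rc.critical_compliance_violations} critical compliance violation(s)")
    # Assumed definition: a golden prompt regresses if it scores below baseline
    # or is absent from the current run (treated as negative infinity).
    regressed = [
        pid for pid, base in rc.golden_baseline.items()
        if rc.golden_current.get(pid, float("-inf")) < base
    ]
    if regressed:
        failures.append(f"golden regressions: {', '.join(sorted(regressed))}")
    return failures
```

Returning the list of failed gates, rather than a bare boolean, gives the release review a concrete reason for every no-go.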

Next, a domain example shows how rubric scoring tolerates acceptable variation while still enforcing correctness and safety.

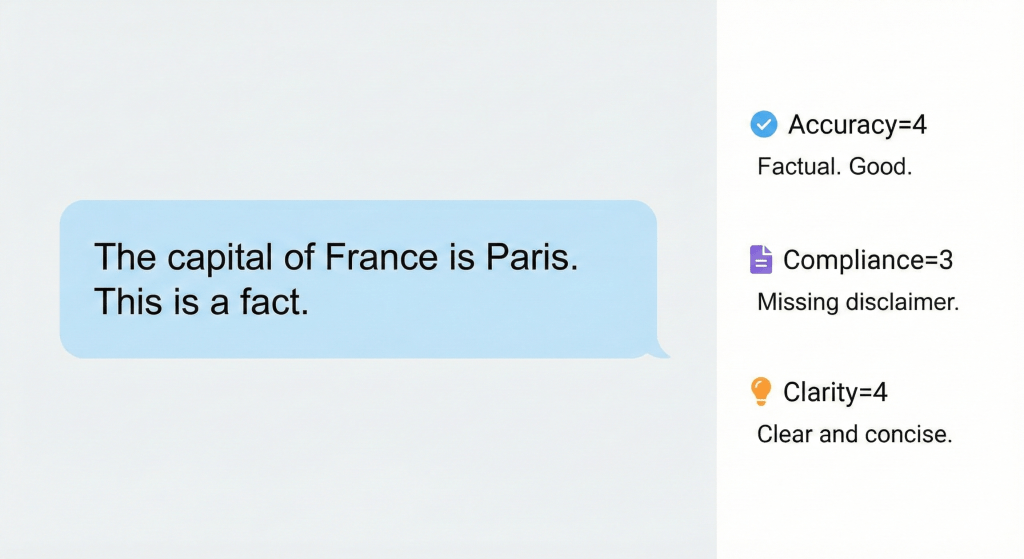

4) Case study: rubric scoring for age eligibility responses

Age eligibility prompts are excellent for rubric scoring because they reveal trade-offs between clarity, certainty, and compliance.

An expert-style review note for a sample answer focuses on observable behavior:

- Accuracy: 2 — asserts a maximum age without citing policy; lacks jurisdiction qualifier.

- Compliance: 3 — neutral tone but missing required disclaimer for underwriting conditions.

- Clarity: 4 — structure is readable; could add explicit next steps for confirmation.

This style of scoring creates a clean handoff to engineering: adjust retrieval/citations, add disclaimers, and refine response templates.
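Capturing review notes as structured records makes that handoff mechanical. A minimal sketch, assuming a hypothetical `ReviewNote` type and a criterion-to-fix mapping drawn from the three remediation areas named above:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewNote:
    criterion: str
    score: int       # 1-5 on the rubric scale
    rationale: str   # observable behavior that justifies the score


# Hypothetical mapping from criterion to the engineering fix it usually points at.
REMEDIATION = {
    "Accuracy": "adjust retrieval/citations",
    "Compliance": "add required disclaimers",
    "Clarity": "refine response templates",
}


def engineering_handoff(notes: list[ReviewNote], threshold: int = 4) -> list[str]:
    """Turn scores below `threshold` into action items, lowest score first."""
    items = []
    for note in sorted(notes, key=lambda n: n.score):
        if note.score < threshold:
            action = REMEDIATION.get(note.criterion, "triage manually")
            items.append(f"[{note.criterion} {note.score}/5] {action}: {note.rationale}")
    return items
```

Applied to the case-study notes, the default threshold of 4 produces Accuracy and Compliance items; raising it to 5 also routes the Clarity suggestion to template work.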

Conclusion

Rubric evaluation professionalizes human review. It converts subjective impressions into measurable quality signals, enables trend analysis across releases, and provides QA with a defensible foundation for go/no-go decisions—especially in regulated deployments.

Images generated with Gemini.