Introduction

Test automation is often treated as a proxy for high-quality testing. The more tests you automate, the more “advanced” your team is supposed to be.

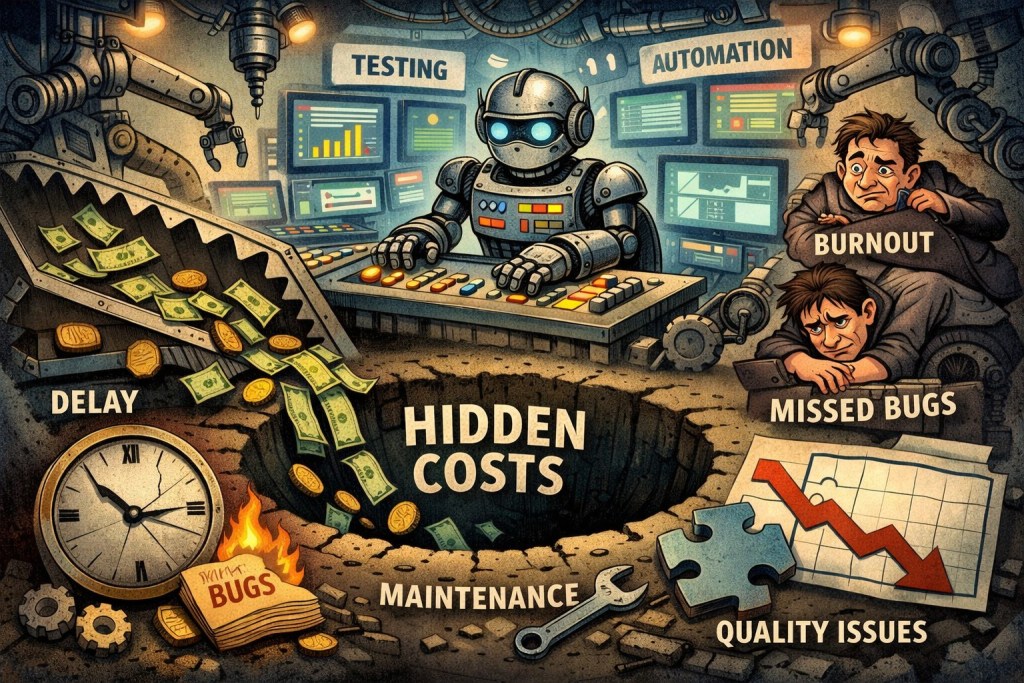

Yet many teams eventually reach a point where automation, instead of helping, quietly becomes a burden.

- Builds take longer.

- Tests break for unclear reasons.

- Fixing the test suite takes more time than investigating real bugs.

This is the hidden cost of over-automation, and it’s far more common than people think.

Automation Was Never Meant to Replace Manual Testing

Automation is excellent at doing the same thing repeatedly and consistently, as in regression testing or smoke tests.

What it’s bad at is understanding context, intent, or sudden change.

When teams try to automate everything, the test suite becomes a reflection of how the system used to work, not how it works now.

Gradually, automation starts to reinforce outdated thinking instead of challenging it.

The Illusion of Safety

A large automated test suite feels reassuring to a lot of people. Lots of tests passing must mean the system is healthy, right?

The truth:

- Real user behavior isn’t represented correctly

- Tests pass while defects still leak through to production

Automation doesn’t guarantee quality. It only guarantees that something ran.

The Long-Term Cost of Automated Tests

Every automated test has a lifetime cost. Writing it is just the beginning.

Tests need to be:

- Updated when behavior changes

- Fixed when environments or their configuration shift

- Refactored when architecture evolves

When too much is automated, maintenance quietly consumes more and more time. Teams start spending entire sprints fixing tests instead of improving the product.

At that point, automation stops saving time and starts competing with the time needed to fix real problems.

Fragile Tests Grow Faster Than Actual Bugs

Over-automation often leads to fragile tests — especially at the UI level.

Tests that:

- Rely on exact text

- Assume specific layouts

- Follow long, detailed flows

… tend to break whenever copy, styling, or an intermediate step changes, even when the feature still works.

Each new feature or redesign doesn’t just add complexity to the suite; it multiplies the number of tests that can fail for reasons unrelated to actual defects.

Eventually, teams begin to ignore failures because “it’s probably just random.”
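To make the difference concrete, here is a minimal sketch of the same check written two ways. Everything in it is made up for illustration: `render_confirmation` stands in for a real page, and the `data-testid` and `data-order-id` attributes are an assumed convention, not something any particular framework requires. The first check is tied to exact text and markup; the second only looks at what the user actually needs to be true.

```python
import re


def render_confirmation(order_id: int, redesigned: bool = False) -> str:
    """Hypothetical view that returns the order confirmation banner as HTML."""
    if redesigned:
        # Same behavior, new copy and a different tag.
        return (
            f'<p data-testid="order-confirmation" data-order-id="{order_id}">'
            f"Thanks! Order #{order_id} is on its way.</p>"
        )
    return (
        f'<div data-testid="order-confirmation" data-order-id="{order_id}">'
        f"Your order {order_id} has been placed.</div>"
    )


def brittle_check(html: str, order_id: int) -> bool:
    # Relies on exact text and a specific layout: any copy or markup change fails it.
    expected = (
        f'<div data-testid="order-confirmation" data-order-id="{order_id}">'
        f"Your order {order_id} has been placed.</div>"
    )
    return html == expected


def resilient_check(html: str, order_id: int) -> bool:
    # Checks only that a confirmation for this specific order is present.
    match = re.search(
        r'data-testid="order-confirmation"[^>]*data-order-id="(\d+)"', html
    )
    return match is not None and int(match.group(1)) == order_id
```

After the hypothetical redesign, `brittle_check` fails even though the order was confirmed correctly, while `resilient_check` still passes and still fails if the wrong order id is shown.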

Speed Suffers, Even If Coverage Looks Good

As test suites grow, pipelines slow down. Feedback that once took minutes now takes hours. Developers stop running tests locally.

Ironically, automation intended to speed things up can become the reason teams move slower.

Not All Failures Are Equal, but Automation Treats Them as If They Were

Over-automated test suites often fail loudly but unhelpfully. A broken test might indicate:

- A real defect

- A trivial UI change

- A flaky environment issue

- An outdated requirement

When everything is automated, teams lose the ability to quickly distinguish between meaningful failures and noise. This slows down decision-making and erodes trust in test results.
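One way to claw back some of that signal is to sort failures into rough buckets before a human looks at them. The sketch below is only an illustration: the keyword hints are invented, and a real suite would need rules tuned to its own error messages. Note that it does not try to tell a real defect from an outdated requirement, because that call needs a person.

```python
from enum import Enum


class FailureKind(Enum):
    ENVIRONMENT = "flaky environment issue"
    UI_CHANGE = "trivial UI change"
    NEEDS_HUMAN = "possible real defect or outdated requirement"


# Hypothetical keyword hints, chosen for this example only.
ENVIRONMENT_HINTS = ("timeout", "connection refused", "503")
UI_HINTS = ("element not found", "selector", "unexpected text")


def classify_failure(message: str) -> FailureKind:
    """Put a failure message into a rough triage bucket."""
    text = message.lower()
    if any(hint in text for hint in ENVIRONMENT_HINTS):
        return FailureKind.ENVIRONMENT
    if any(hint in text for hint in UI_HINTS):
        return FailureKind.UI_CHANGE
    # Anything else might be a real defect or a stale expectation.
    return FailureKind.NEEDS_HUMAN
```

For example, `classify_failure("Element not found: #checkout-button")` lands in `UI_CHANGE`, while a message like `"expected total 90.00 but got 100.00"` falls through to `NEEDS_HUMAN`, which is where attention should go first.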

Automation Should Follow Stability, Not Lead It

One of the biggest mistakes teams make is automating features before they’ve stabilized.

Early-stage features change rapidly. Automating them in detail locks in assumptions that won’t survive the next sprint. The result is constant rework and frustration.

Automation works best when it protects behavior that should not change, not behavior that’s still being figured out.

A Healthier Way to Think About Automation

Good automation is selective and adaptable.

It focuses on:

- Business-critical rules

- Stable workflows

- High-risk areas

- Regressions that would be costly to miss

It leaves room for:

- Human exploration

- Human judgement

- Adaptation

The goal isn’t maximum automation. It’s meaningful automation.

Measuring the Right Things

Instead of asking:

“How much have we automated?”

Try asking:

- Which failures actually matter?

- How often do tests catch real issues?

- How much time do we spend maintaining tests?

- Do we trust our test results?

These questions lead to better decisions than coverage percentages ever will.
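If your team already logs what each test failure turned out to be, a couple of these questions can be answered with very little code. This is a rough sketch under that assumption; the `FailureRecord` fields and both function names are hypothetical, and you would feed it your own records rather than anything shown here.

```python
from dataclasses import dataclass


@dataclass
class FailureRecord:
    """One investigated test failure (hypothetical log format)."""
    test_name: str
    was_real_defect: bool
    minutes_to_resolve: int


def signal_ratio(records: list[FailureRecord]) -> float:
    """Share of failures that pointed at a real issue."""
    if not records:
        return 0.0
    return sum(r.was_real_defect for r in records) / len(records)


def maintenance_minutes(records: list[FailureRecord]) -> int:
    """Time spent on failures that were not real defects."""
    return sum(r.minutes_to_resolve for r in records if not r.was_real_defect)
```

A low `signal_ratio` next to a high `maintenance_minutes` total is a hint that the suite is costing more attention than it earns, whatever the coverage number says.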

Final Thoughts

Automation is powerful, but only when used deliberately.

Over-automation doesn’t usually fail loudly. It fails quietly, through wasted time, slow feedback, and misplaced confidence.

The most effective test strategies don’t try to automate everything. They automate what’s worth protecting and leave space for human insight everywhere else.

Sometimes, doing less automation leads to better quality.